If you have a basic idea of Storm and are about to try out its DRPC feature, I would suggest reading this before you do.

Why we came to the idea of using DRPC in Storm

Of course, this was not plan A when we started our project, which had complex calculations to run at high throughput.

The requirements we had in mind were:

1. We should not lose any request that comes into Storm.

2. We should be able to generate the results in real time.

As Storm is known for its stream processing capabilities, our attention was on the request loss factor. So we needed a messaging queue that would act as a shock absorber when a flood of requests arrives. We selected Kafka.

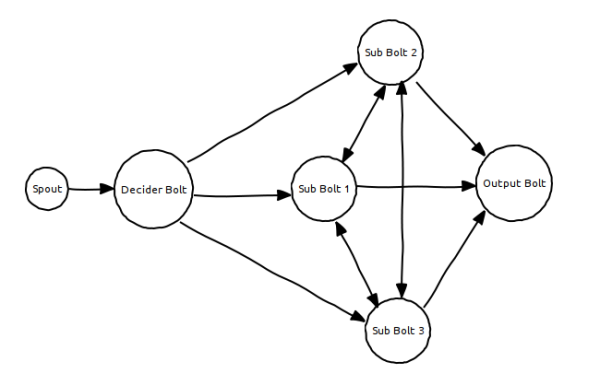

A week later, we had our topology built on Storm with Kafka feeding our spout. Requests were getting processed and the loss of requests was minimal. The issue was the processing time Storm was taking.

From the moment we inserted a request into Kafka, it took 1.8 seconds to generate a result and store it in the cache, which was absolutely unacceptable.

We looked into why result generation was delayed. In the Storm UI, the message processing time taken by the bolts in our topology was minimal, in single-digit milliseconds. So naturally suspicion fell on Kafka: it is a persistent messaging queue, so it adds the delay of writing to and reading from disk.

Why did processing with the Storm-Kafka combination take 1.8 seconds?

The conclusion we arrived at in the end is below.

The 1.8 seconds taken by the daemon is made up of:

1. the time to write to the file system when a message is produced into Kafka.

2. the time for the Storm spout to read the same message from Kafka.

3. the time taken by the complex calculations in the other bolts.

Of these, the Storm UI gave us the time taken by each and every bolt, so the rest went into Kafka’s bag. And it was more time than we could tolerate for a real-time requirement.
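The subtraction behind that reasoning can be written down as a small sketch. The class name, the method name and the per-bolt numbers are made up for illustration; only the 1.8 second total and the "single-digit milliseconds per bolt" observation come from our setup. It also assumes the bolts sit on one sequential path, so their times simply add up.

```java
import java.util.Arrays;

// Hypothetical helper: whatever the bolts do not account for is attributed
// to the queueing layer (Kafka write plus spout read in the setup above).
class LatencyBudget {

    // endToEndMillis: time from producing the request to the result being stored.
    // boltMillis: per-bolt processing times as read off the Storm UI.
    static long unaccountedMillis(long endToEndMillis, long[] boltMillis) {
        long boltTotal = Arrays.stream(boltMillis).sum();
        return endToEndMillis - boltTotal;
    }

    public static void main(String[] args) {
        // Illustrative numbers only: 1.8 s end to end, three bolts at a few ms each.
        long[] bolts = {4, 7, 9};
        long rest = unaccountedMillis(1800, bolts);
        System.out.println("Not explained by bolts: " + rest + " ms");
    }
}
```

With numbers like these, almost the whole delay falls outside the bolts, which is why the queue was the first suspect.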

Red card to Kafka. That left us with two options for using Storm:

1. Replace Kafka with an in-memory queue.

2. Use the DRPC feature of Storm and execute the query directly on the topology.

The option we tried out first was DRPC.
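To make the in-memory queue option concrete, here is a minimal sketch using only the Java standard library. `RequestBuffer` and its methods are hypothetical names, not part of Storm or Kafka, and this is not something we built. A spout could poll such a buffer in place of reading from Kafka.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical bounded buffer standing in for the "in-memory queue" option.
class RequestBuffer {
    private final BlockingQueue<String> queue;

    RequestBuffer(int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity);
    }

    // Returns false instead of blocking when the buffer is full,
    // so the caller can decide whether to retry or reject the request.
    boolean offer(String request) {
        return queue.offer(request);
    }

    // Returns the oldest buffered request, or null when the buffer is empty.
    String poll() {
        return queue.poll();
    }

    int size() {
        return queue.size();
    }
}
```

The trade-off is that nothing is written to disk: reads and writes are fast, but anything still in the buffer is gone if the process dies, which works against the "do not lose any request" requirement.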

Taking our first steps with Storm DRPC

For anyone who searches Google for “storm drpc”, the first result is the Storm wiki page that tells you about DRPC.

Issues we came across while building our topology for DRPC calls:

1. The Storm wiki describes implementing the topology with LinearDRPCTopologyBuilder in plain Storm, not in Trident. But linear DRPC topologies are deprecated as of now. So what should we do? We have to use Trident.

2. We need a DRPC client written in the language from which we are going to make the DRPC calls to Storm. We had to make those calls from PHP, and we could not find a PHP client when we searched the net.

Some client implementations we could find were.

Writing a Storm DRPC client in PHP

We found this question on storm-user and we posted a question of our own on Stack Overflow. We didn't get any proper implementation of Storm DRPC with PHP as the client. So we decided to write the client by modeling it on the other implementations, and published the repo below.

https://github.com/mithunsatheesh/php-drpc

It worked for our purpose.

Topology ported to trident and php client made? Now what else?

We had built our topology in Trident with DRPC calls. Now it's time to build the jar and test it on the cluster. Before doing that, make sure you start the DRPC server on the Storm cluster by running the command below.

storm drpc

If you are looking for sample Trident DRPC topologies, the links below to the storm-starter project should really help.

Still not working for you? Check the below points:

1. When submitting to the cluster, the topology has to contain the line below

topology.newDRPCStream("words", null)

with null as the second argument, and not a local DRPC instance as you see in the local test mode samples.

2. You have to emit from the last bolt, and what it emits is what the DRPC client call receives as its result.

3. Always check the log folders under the Storm path for the exact error.

Did DRPC solve the problem for us?

No. We had the DRPC calls working on Storm and had moved the project from Kafka-Storm to a DRPC Trident solution. But the end result was not satisfactory. The Kafka solution ensured that we processed all the messages but had a delay in processing. DRPC, on the other hand, behaved unpredictably: sometimes it would give a correct result and sometimes a null response. This behavior tempted us to drop the DRPC solution even before we started a load test on it.

And did you know Storm DRPC has an open memory leak issue?

We came across this open issue in Storm DRPC, which says:

“When a drpc request timeout, it is not removed from the queue of drpc server. This will cause memory leak.” This would eventually lead Storm to crash.

It means that if processing ever times out in the Storm DRPC server, the allocated resources are not freed, and that would eventually lead to a daemon crash.
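The kind of leak that issue describes can be illustrated with a toy model. To be clear, this is not Storm's DRPC server code: `PendingRequests` and everything in it are hypothetical, and the sketch only assumes that a server keeps a table of in-flight requests keyed by request id. The `removeOnTimeout` flag switches between the leaky behavior and the cleanup the issue says is missing.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Toy stand-in for a request/response server; NOT Storm's implementation.
class PendingRequests {
    private final Map<String, CompletableFuture<String>> pending = new ConcurrentHashMap<>();
    private final boolean removeOnTimeout;

    PendingRequests(boolean removeOnTimeout) {
        this.removeOnTimeout = removeOnTimeout;
    }

    // Registers a request and waits up to timeoutMillis for its result.
    Optional<String> execute(String requestId, long timeoutMillis) {
        CompletableFuture<String> result = new CompletableFuture<>();
        pending.put(requestId, result);
        try {
            String value = result.get(timeoutMillis, TimeUnit.MILLISECONDS);
            pending.remove(requestId);
            return Optional.ofNullable(value);
        } catch (TimeoutException e) {
            if (removeOnTimeout) {
                pending.remove(requestId); // cleanup on timeout
            }
            // Without the cleanup, the entry stays in the map forever.
            return Optional.empty();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            pending.remove(requestId);
            return Optional.empty();
        } catch (ExecutionException e) {
            pending.remove(requestId);
            return Optional.empty();
        }
    }

    // Called by the processing side when a result is ready.
    boolean complete(String requestId, String value) {
        CompletableFuture<String> future = pending.get(requestId);
        return future != null && future.complete(value);
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        PendingRequests leaky = new PendingRequests(false);
        for (int i = 0; i < 3; i++) {
            leaky.execute("req-" + i, 1); // nobody completes these, so each one times out
        }
        System.out.println("Entries left after timeouts: " + leaky.pendingCount());
    }
}
```

In the leaky mode every timed-out request leaves an entry behind, so the table only grows under sustained timeouts.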

Anything wrong with our reasoning? We should discuss it.

These are very beginner-level conclusions that I arrived at with my minimal experience with Storm and even with Java. So if you think any of the above facts are wrong, or even right, feel free to comment below so that we can get more clarity on them. I put all of this together so that a newcomer who is going to try their luck with DRPC knows all of it in advance.